Redux in 2025: A reliable choice for complex React projects

Every few months, a shiny new state management library shows up promising to ‘finally replace Redux.’ And yet… here we are in 2025, and Redux is still standing, sipping its coffee, watching the new kids sprint by before tripping over their own APIs. Why hasn’t it faded away? Because Redux nailed four fundamentals that never really go out of style: predictability, observability, scalability, and architectural clarity. Pair that with React’s unidirectional data flow, and you start to see why Redux keeps hanging around. The question is: can the new wave of tools really do better, or are they just remixing the classics?

Magical reactivity

Since the beginning of time, several UI framework authors have been trying to make the UI automagically respond to changes in application state. My first encounter with this concept was Knockout.js, but a myriad of different frameworks and libraries have attempted to solve this puzzle in their respective ways. Typically this has been done through getter/setter traps or more recently, Proxy objects, in an effort to abstract away all dependency tracking and change listeners of any kind.

// Examples of reactivity with Proxy objects in Vue 3

import { ref, reactive } from "vue";

const x = ref(0);

const y = reactive({ foo: true })

// getters to intercept read ops

x.value;

y.foo;

// setters to intercept write ops

x.value++;

y.foo = false;One of the more modern variants to this kind of reactivity abstraction comes in the form of Signals. In my experience, SolidJS was the first to do these well, although somewhat preceded by Vue 3’s composition api.

// Examples of reactivity with Signals in SolidJS

import { createSignal } from "solid-js";

const [getValue, setValue] = createSignal(0);

getValue(); // intercepts read ops

setValue(); // intercepts write opsOthers followed suit: Svelte with runes, Preact signals, Qwik state and also Angular introduced signals. There is even a stage 1 TC39 standard proposal for Signals. This is a clear indicator that signals are generally perceived very well. Presumably, this positive perception of signals is due to their simplicity and speed.

While I was never fond of the concessions required to make automagical reactivity possible with an ergonomic api surface, the broader web community seems to appreciate it. One example of such a concession is that using object destructuring (a native language feature) is discouraged because it breaks proxy behavior. To make automatic dependency tracking possible, the developer needs to props.foo instead of { foo } = props.

import { createEffect } from "solid-js";

// Reactivity proxy in props is lost because of destructuring in SolidJS

function MySolidComponent({ foo, bar, baz } /* <- destructured props */) {

// foo bar baz are now static values, and will not be tracked by effects, computeds, etc..

createEffect(() => {

console.log({ foo, bar, baz }); // will not log when values change

})

}import { reactive, computed } from "vue";

// Reactivity proxy in a "reactive" object in Vue is lost because of destructuring

const { foo, bar, baz } = reactive({ foo: 0, bar: 1, baz: 2 });

// qux is computed once, and not recomputed when values change

const qux = computed(() => foo + bar + baz); Maybe I am nitpicking. However, there are more real world consequences to signals that I want to address.

State management chaos

Anyone who has wrestled with RxJS (or observables at scale in general) knows how nightmarish it can be to reason about or debug a reactivity chain buried inside a huge web of observable interdependencies.

While rxjs observables and signals are not the same thing, conceptually they occupy the same problem space: modeling data that changes over time, and propagating updates automatically through the system’s dependency graph.

Signals, however, take a different approach than observables. They act like reactive buckets: you can synchronously get or set their value at any time, and they automatically notify subscribers of those changes. Unlike observables, dependency tracking in signals is implicit. It happens whenever a signal is read. This implicitness makes the system feel simple at first, but also less transparent and harder to predict. Even more so than observables.

// Preact signals and effects

import { count, double, effect } from "@preact/signals";

// creates a proxy object that intercept read ops on its `value` property

const count = signal(0);

// computed automatically tracks `count` as its dependency when `count.value` is read,

// by eagerly evaluating the callback function

const double = computed(() => count.value * 2);

// effect also automatically tracks `count` as its dependency when read,

// executing the callback function every time count changes

effect(() => console.log(`Count is ${count.value}`));As your codebase grows, that hidden complexity can accumulate until the very simplicity and developer experience you traded scalability for, begins to erode.

Unidirectional dataflow and explicit state changes

React’s unidirectional dataflow takes the opposite approach. Instead of hidden dependencies, React applications express data flow explicitly: state flows downward, events bubble upward. The produced UI is a function of your state. State goes in, UI comes out. Commonly expressed as: UI = f(state)

This model is less magical than signals and can feel more verbose in practice, since developers must deliberately decide where to place state, when to memoize, and when to run side effects. But the benefit is predictability, as cause and effect are easier to see.

// useState returns the current state and a setter function from an internal closure

const [count, setCount] = useState(0);

// React has no concept of a "computed" property.

// Data flows down into this component on every update,

// hence we can simply do something like:

const double = count * 2;

// `count` is explicitly tracked as a dependency of this effect;

// the effect runs when count changes

useEffect(() => console.log(`count is ${count}`, [count]);

// event bubbles up, state is updated and flows back down into this component

<button onClick={() => setCount(count => count + 1)}>ohRedux extends this concept by making events explicit through the use of an “action” data type. Actions describe what happened, and are object literals like { type: "something happens" }. These actions are handled by “reducer” functions; reducers define how state changes based on an action, and can be expressed as (state, action) => state. Any update to application state in Redux is triggered by dispatching an action, which is then reduced to produce the next state.

// a "slice" of redux state

const countSlice = createSlice({

name: 'counter',

initialState: { count: 0 },

reducers: {

// the "increment" method name is used by redux toolkit as action type: `{ type: "increment" }`

incrementBy(state, action) {

// the next state is produced, using the current state and the action payload

state.count = state.count + action.payload;

}

}

});You may have noticed: Redux shares the same unidirectional data flow model as React. User events trigger actions, which are processed by reducers to produce the next state. That new state then flows downward through your application, updating the UI.

Let’s contrast a Redux action with a Signal update, using a SolidJS store.

const [state, setState] = createStore({ count: 0 });

setState("count", (count) => count + 1);An obvious advantage is that this is simple: Minimal syntax, no ceremony. But also harder to trace/debug at scale, no global “action log.”

In Redux, updates happen through a formal, global, serializable event:

{ type: 'increment' }It’s more verbose, but powerful for large apps and tooling. With Signals, updates happen through a direct function call setState(...) buried in component logic. It’s ergonomic, but lacks the centralized predictability of Redux.

Time-travel and middleware

Because actions are plain serializable data and reducers are pure functions, the Redux store’s state is fully determined by initialState + sequence of actions → currentState.

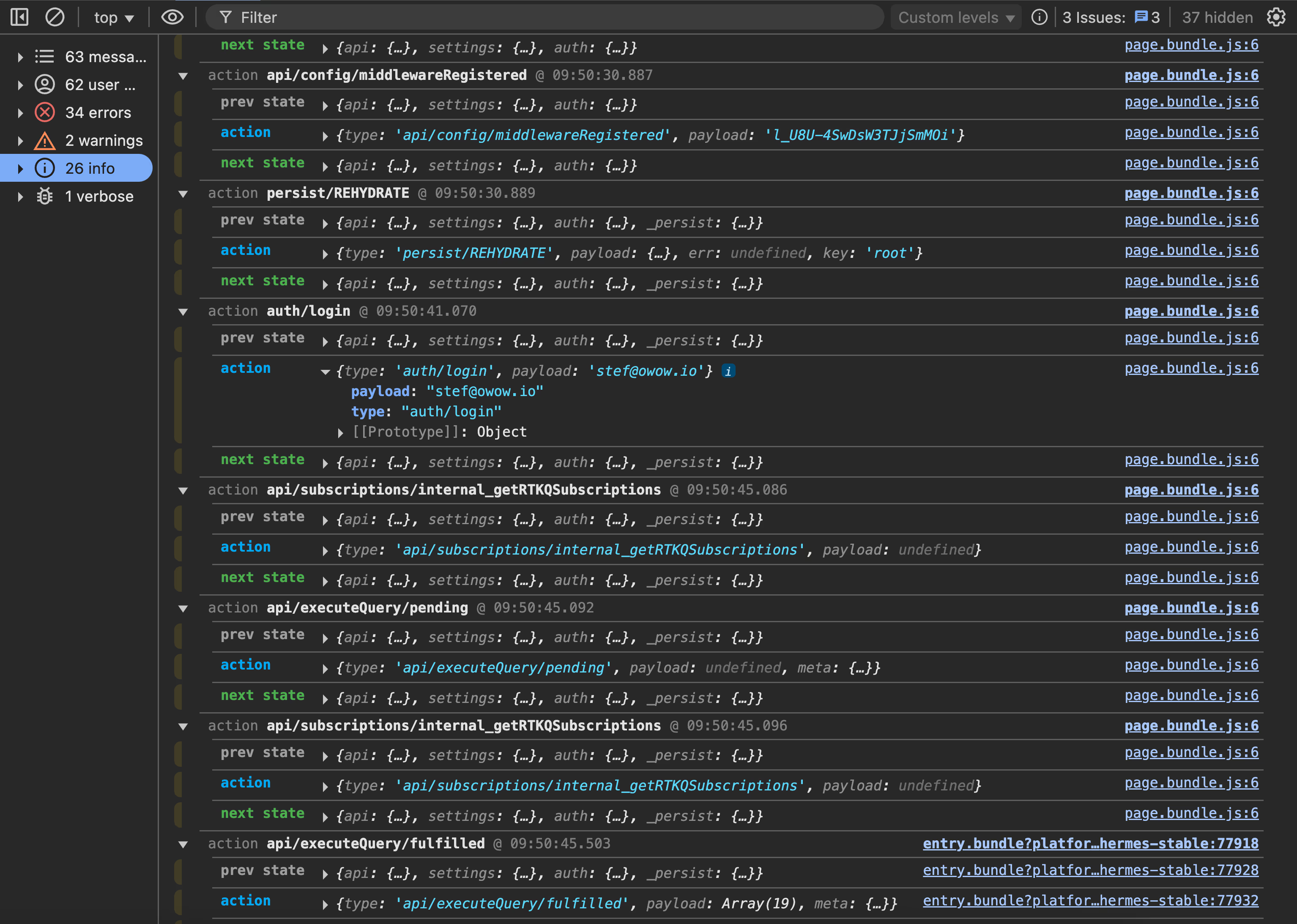

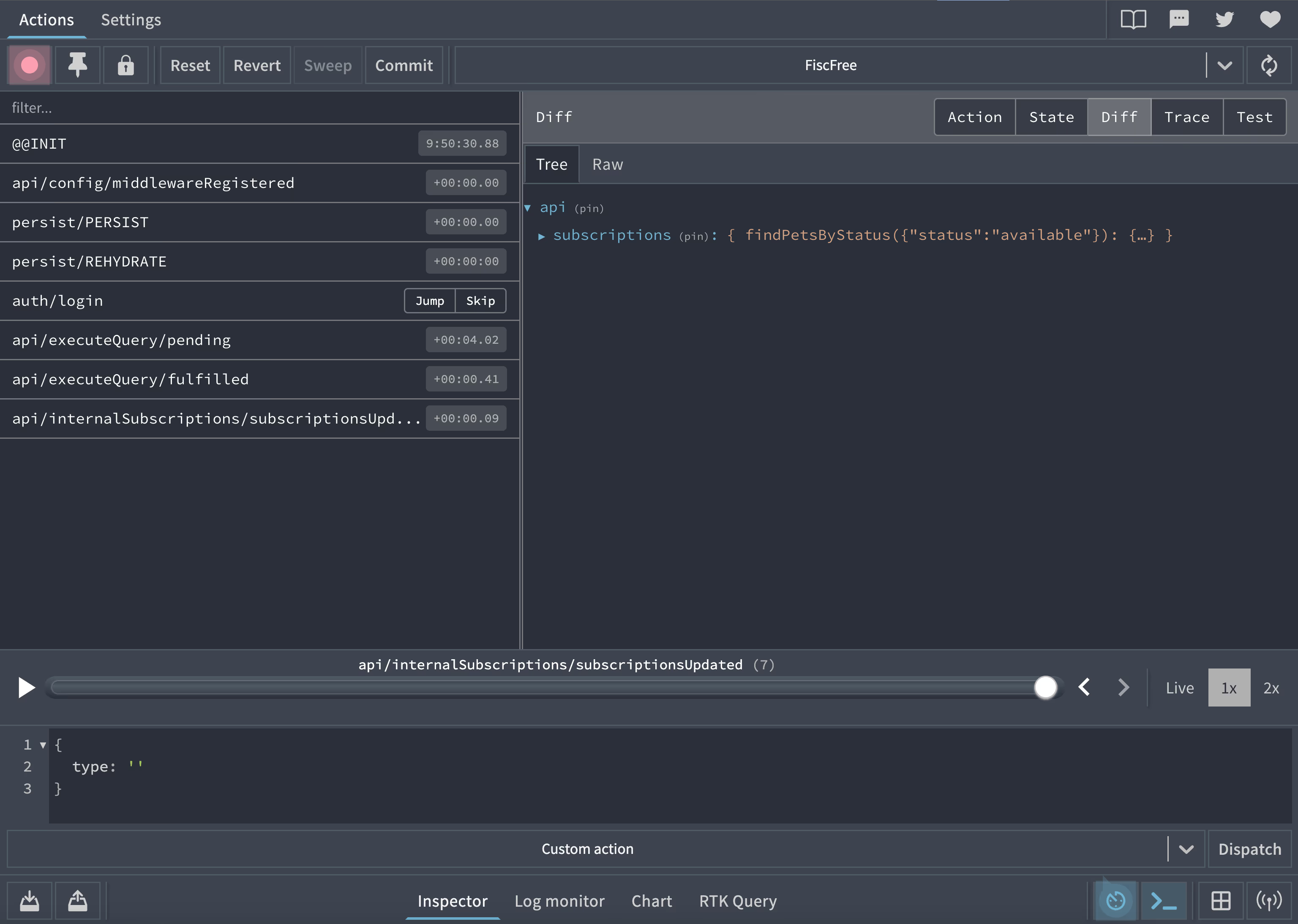

That determinism is precisely what enables Redux DevTools logging, replay or “time travel” debugging features.

Every time you call dispatch({ type: "something happens" }), Redux produces a new state by passing the previous state and the action into a reducer. Since actions are just plain, serializable objects, the DevTools can log them, store them, and even send them across the network without losing information. And because reducers are deterministic, replaying the same sequence of actions against the initial state will always yield the exact same result.

This predictable relationship is what makes time-travel debugging possible. The DevTools record each dispatched action along with the state before and after it. That lets you step backward and forward in time, skip certain actions, reorder them, or replay a bug sequence so you can see exactly how the app arrived at its current state.

Architectural clarity

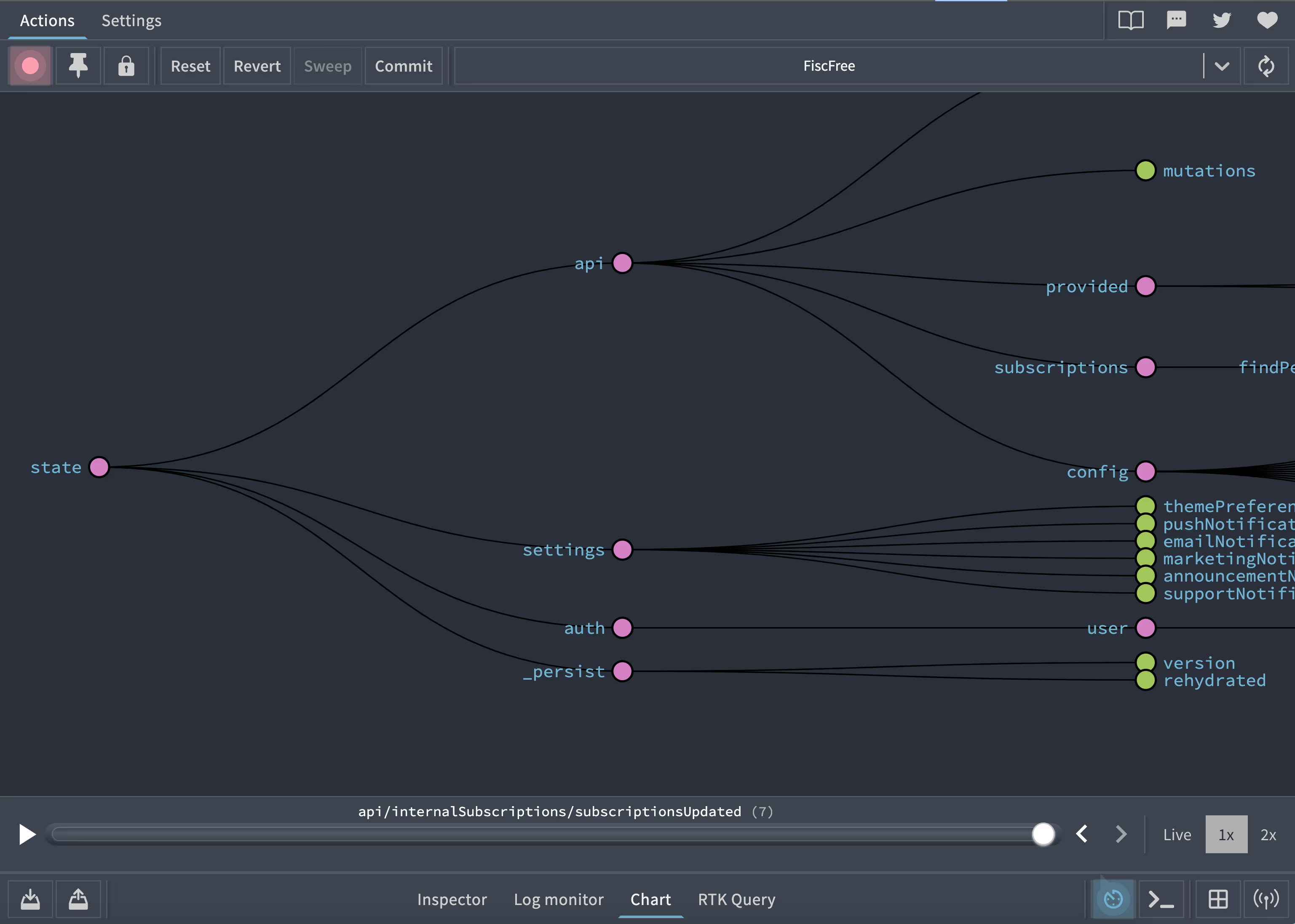

Redux deliberately splits responsibilities across actions, reducers, and components, so each piece has a single, well-defined job. To reiterate: Actions are just descriptions of what happened in the app, plain objects that say “a user clicked a button” or “data finished loading.” Reducers take the current state plus an action and return the next state, without worrying about how that state will be displayed. Components then focus solely on rendering the UI based on the current state and dispatching actions in response to user input.

// counter-slice.ts

const countSlice = createSlice({

name: 'counter',

initialState: { count: 0 },

reducers: {

incrementBy(state, action // <- what happened) {

state.count = state.count + action.payload; // <- next state

}

}

});

export const { incrementBy } = countSlice.actions;// component.tsx

import { useDispatch, useSelector } from "react-redux";

import { incrementBy } from "./counter-slice.ts";

function Counter() {

const dispatch = useDispatch();

const count = useSelector(state => state.counter.count);

return <>

<p>Count is {count}</p>

<button onClick={() => dispatch(incrementBy(1))}>

Increment

</button>

</>

}Because this separation is built into Redux’s design, the patterns are enforced rather than left to developer discipline. It also makes refactoring safer, because changing how state is structured doesn’t require rewriting all your components or event handlers. Finally, it improves reasoning: when something breaks, you know whether to look at the action creator, the reducer logic, or the component, because each one has a single concern.

This enforced separation of concerns is what gives Redux projects architectural clarity and long-term maintainability.

The Future: Redux’s Lasting Influence

Redux may no longer be the shiny new tool everyone is rushing to adopt, but its design continues to echo across the frontend ecosystem. The core ideas of a single source of truth, pure state transitions, and unidirectional data flow have shaped how newer libraries think about state. Tools like Zustand (although more ergonomic but less deterministic) and Jotai look much leaner on the surface, but if you dig deeper you’ll see Redux’s fingerprints: plain actions, predictable updates, and a focus on transparency.

The Case for Redux

When you zoom out, four pillars define why Redux has stuck around: predictability (state is just data and reducers are pure), observability/debuggability (thanks to time-travel and action logging), scalability (a single store and enforced patterns help teams grow without chaos), and architectural clarity (actions, reducers, and components each have one job).

It isn’t a question of old versus new. Frameworks and compilers evolve, and lighter state libraries come and go. For example, using something like Zustand or Pinia, or React’s built-in Context + hooks in smaller projects, might be simpler. What endures are the principles that Redux put on the map: unidirectional data flow, strict separation of concerns, and the guarantee that state transitions are traceable.

Final thought

In a world of fleeting trends, React and Redux’s principles are timeless. Whether you’re building a small app or a massive platform, unidirectional data flow gives you the confidence to scale without fear.

Do you still use Redux in your projects? Why or why not?